Attack Impact on E2E AD Model

End-to-end (E2E) autonomous driving models are increasingly popular and often incorporate online map construction as a key module. We therefore extend our experiments by launching the Road Straightening and Early Turn attacks on VAD, a widely used E2E model, across 100 asymmetric scenes. The results show that the proposed attacks compromise not only dedicated online map construction models but also E2E autonomous driving systems, degrading both map perception and planning: under attack, mAP falls from 50.8% to between 19.4% and 25.3%, and the average L2 planning distance rises from 0.77 m to roughly 3.7 m.

| Attack | Setting | AP_boundary (%) | AP_divider (%) | AP_ped (%) | mAP (%) | Avg. L2 distance (m) |
|---|---|---|---|---|---|---|
| None | Clean | 45.6 | 58.2 | 48.7 | 50.8 | 0.77 |
| Road Straightening Attack | Blinding | 21.1 | 22.8 | 22.8 | 22.2 | 3.71 |
| Road Straightening Attack | Adv. patch | 16.1 | 22.3 | 19.8 | 19.4 | 3.69 |
| Early Turn Attack | Blinding | 22.1 | 28.3 | 25.6 | 25.3 | 3.70 |
| Early Turn Attack | Adv. patch | 15.4 | 24.1 | 21.9 | 20.4 | 3.71 |
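For readers who want to relate the two metric families in the table above, the following is a minimal sketch of how such numbers are typically computed. It rests on two assumptions that the text does not state explicitly: that mAP is the unweighted mean of the three per-class APs (consistent with the Clean row, where (45.6 + 58.2 + 48.7) / 3 ≈ 50.8), and that the planning metric is the mean Euclidean distance between planned and reference waypoints. The function names `mean_ap` and `avg_l2_distance` are hypothetical and are not taken from the VAD codebase; the actual evaluation protocol may average over specific time horizons.

```python
import math


def mean_ap(ap_boundary, ap_divider, ap_ped):
    """Unweighted mean of the three per-class APs (assumed definition of mAP)."""
    return (ap_boundary + ap_divider + ap_ped) / 3.0


def avg_l2_distance(planned, reference):
    """Mean Euclidean distance (in metres) between matching waypoints.

    `planned` and `reference` are equal-length sequences of (x, y) points.
    This is an assumed form of the planning metric, not the official one.
    """
    if len(planned) != len(reference) or len(planned) == 0:
        raise ValueError("trajectories must be non-empty and of equal length")
    total = sum(math.dist(p, r) for p, r in zip(planned, reference))
    return total / len(planned)


# Clean row of the table: per-class APs in percent.
clean_map = mean_ap(45.6, 58.2, 48.7)

# Toy trajectories (made-up values, for illustration only).
toy_error = avg_l2_distance([(0.0, 0.0), (3.0, 4.0)], [(0.0, 0.0), (0.0, 0.0)])
```

Under these assumptions, a planned trajectory that continues straight while the reference trajectory turns accumulates a growing per-waypoint distance, which is how an attack on map perception shows up as a larger average L2 value.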

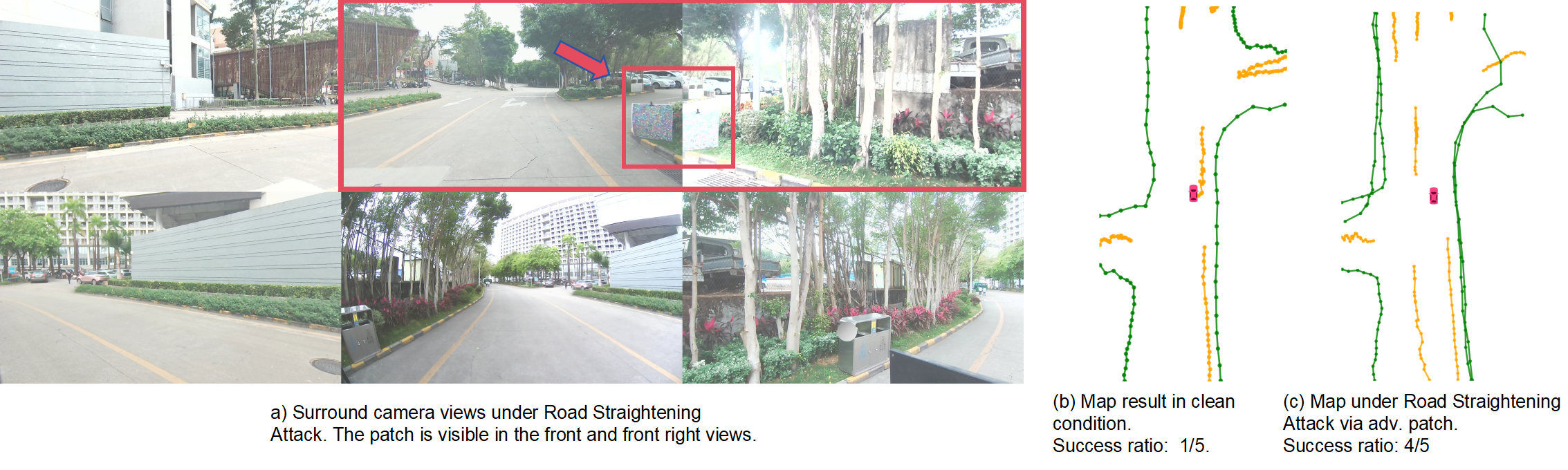

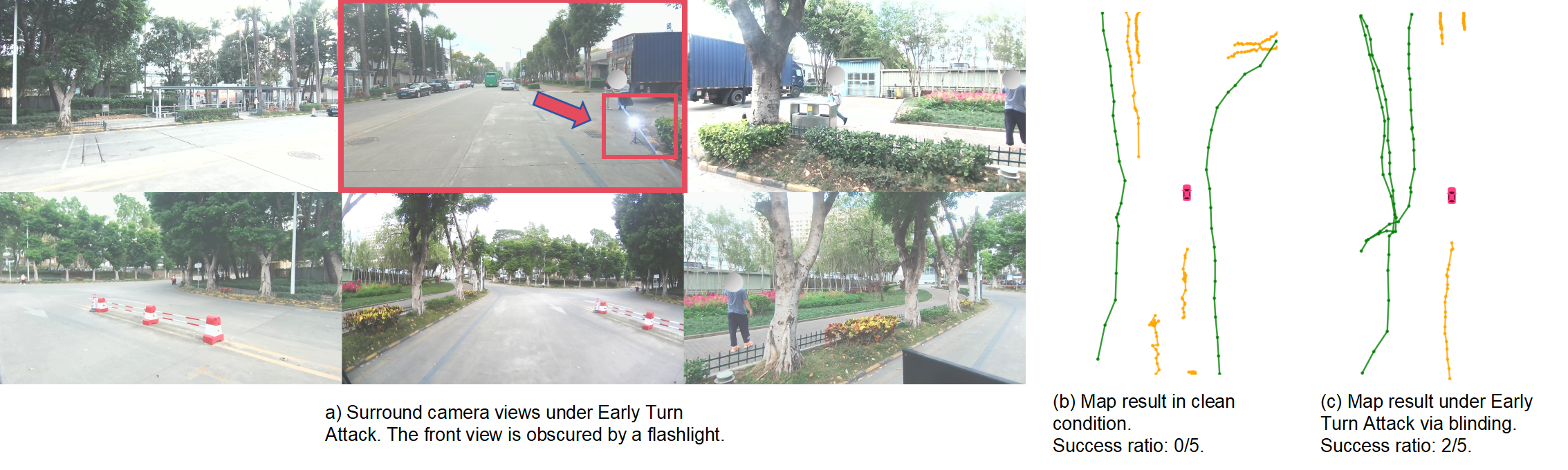

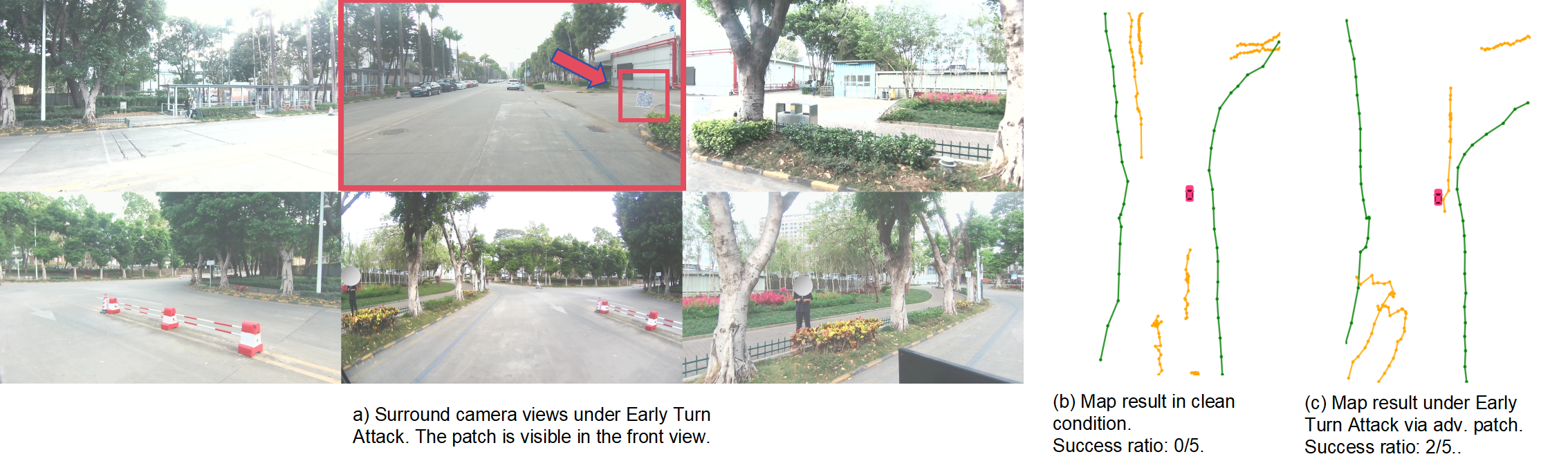

Visualization Example

Figure 1: Clean scenario. The victim AV successfully makes a left turn at the fork.

Figure 2: Road Straightening Attack (RSA) via flashlight. The E2E model (VAD) predicts a straight road and plans to continue straight.